AI and Professional Voice Care: The Journal of Voice, 30 Years at the Frontier

Today we stand at a true inflection point in voice science: artificial intelligence (AI) is fundamentally reshaping how we conduct research, analyze data, and care for patients.

The Voice Foundation: A Legacy of Innovation

This moment of transformation builds on a rich legacy. In 1969, Dr. Wilbur James Gould founded The Voice Foundation in New York City at a time when interdisciplinary care of the human voice was virtually nonexistent. Dr. Gould’s groundbreaking foresight brought together physicians, scientists, speech-language pathologists, performers, and teachers to share their knowledge and expertise in the care of the professional voice user.

The Foundation held its first Annual Symposium—Care of the Professional Voice—in 1972, followed by its first Gala (later named Voices of Summer) in 1973. For over five decades, we have been building bridges between art and science, between the clinic and the stage.

Since 1989, The Voice Foundation has been led by Dr. Robert Thayer Sataloff, an internationally renowned otolaryngologist who is also a professional singer and conductor. Dr. Sataloff has authored more than 1,200 publications, including 79 textbooks. Under his leadership, the Foundation moved to Philadelphia and has continued to advance understanding of the voice through interdisciplinary scientific research and education.

Today, our annual Symposium in Philadelphia draws hundreds of medical, scientific, academic, and speech-language professionals, as well as performing artists from around the world. And we publish the Journal of Voice—the premier peer-reviewed journal dedicated to voice science and medicine.

30 Years of AI Research in Voice Science

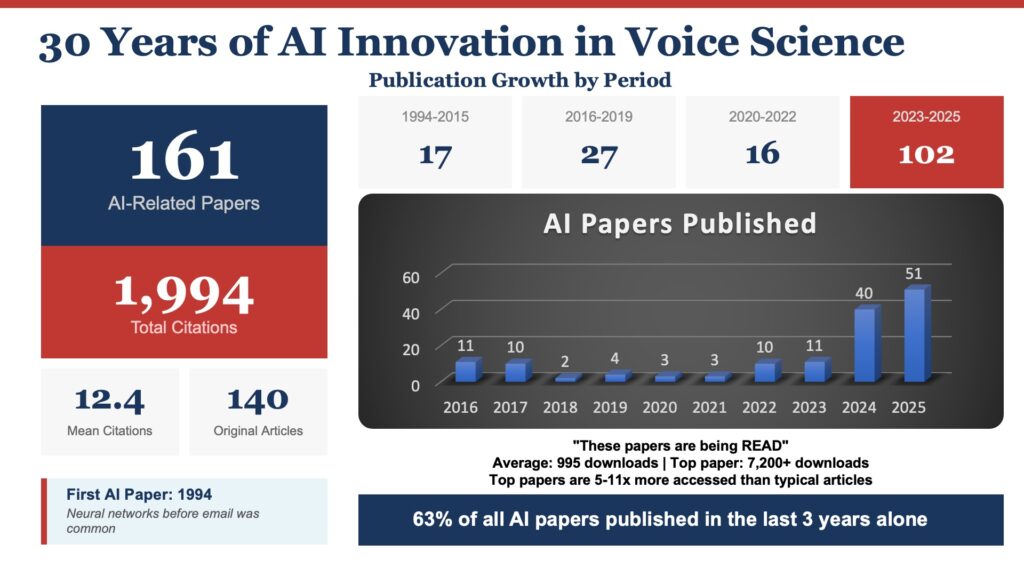

The Journal of Voice has been publishing AI research longer than most people realize. In 1994—thirty-one years ago—we published a paper by Rihkanen and colleagues titled “Spectral Pattern Recognition of Improved Voice Quality.” This paper used neural networks to analyze voice. That was the same year the World Wide Web was just emerging into public consciousness. We were publishing AI research in voice science before most people had ever sent an email.

Fast forward to today, and the numbers are striking:

- 161 AI-related papers published in the Journal of Voice

- 1,994 citations to this body of work

- An average of 12.4 citations per paper

But here’s the statistic that truly captures the moment we’re in: 102 of those 161 papers—63 percent—were published in just the last three years. The publication timeline tells a dramatic story: 17 papers from 1994-2015, 27 papers from 2016-2019, 16 papers from 2020-2022, and then 102 papers from 2023-2025.

In 2025 alone, we’ve already published 51 AI-related papers. That’s more than we published in the entire first twenty years combined. This isn’t gradual growth. This is an exponential transformation of our field.

Beyond Citations: Real-World Impact

These papers aren’t just being cited—they’re being actively read and used by voice professionals around the world. Recent usage statistics reveal the extraordinary reach of this research.

The average AI paper in our journal has been downloaded nearly 1,000 times. Our most-accessed AI paper—a study on machine learning for COVID-19 detection—has been downloaded over 7,200 times, eleven times the median for articles in its issue.

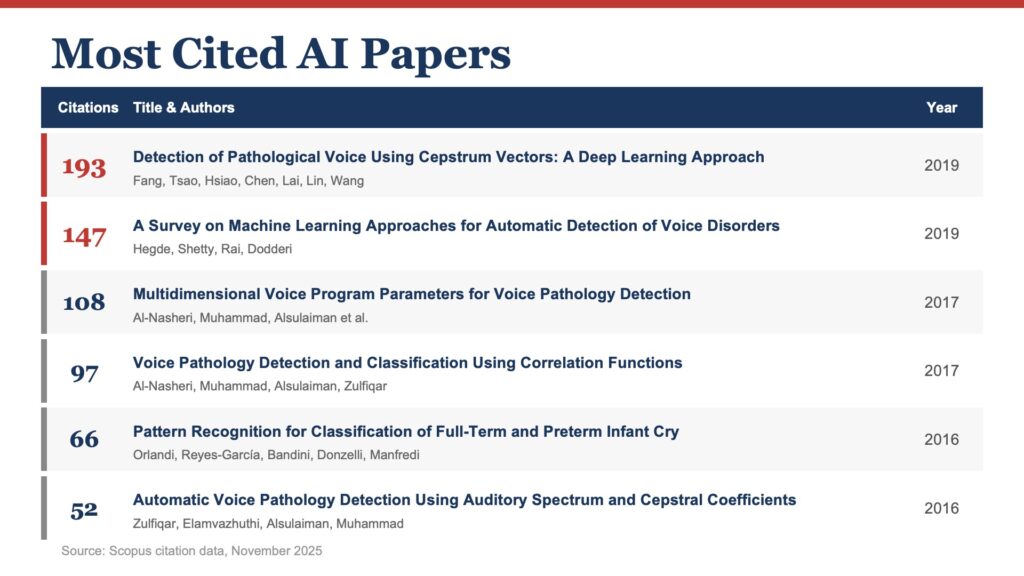

The two most-cited papers, Fang and colleagues’ deep learning study (193 citations) and Hegde and colleagues’ machine learning survey (147 citations), have each been downloaded nearly 5,000 times. Both were among the three most-accessed articles in their respective issues. These downloads reflect more than academic interest: they represent clinicians, researchers, and speech-language pathologists seeking practical tools to advance voice care.

Landmark Research Reshaping the Field

Deep Learning for Voice Pathology Detection

Fang and colleagues’ 2019 study, “Detection of Pathological Voice Using Cepstrum Vectors: A Deep Learning Approach,” remains the most-cited AI paper in our journal’s history with 193 citations. This work demonstrated that deep neural networks could detect voice pathology with remarkable accuracy using acoustic features that the human ear cannot consciously perceive.
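For readers less familiar with the term: a cepstrum is the inverse Fourier transform of the logarithm of a signal's magnitude spectrum. Its lowest-order coefficients summarize the smooth spectral envelope shaped by the vocal tract, while a peak at higher quefrency corresponds to the pitch period. The Python sketch below, which assumes NumPy, computes a plain real cepstrum for a single analysis frame. It is an illustration only, not the feature extraction used by Fang and colleagues; the function names, the 13-coefficient cutoff, and the 50 to 400 Hz pitch search range are hypothetical choices.

```python
import numpy as np


def real_cepstrum(frame: np.ndarray) -> np.ndarray:
    """Real cepstrum of one frame: inverse FFT of the log magnitude spectrum."""
    windowed = frame * np.hanning(len(frame))      # taper edges to reduce spectral leakage
    spectrum = np.fft.rfft(windowed)
    log_magnitude = np.log(np.abs(spectrum) + 1e-10)  # small offset avoids log(0)
    return np.fft.irfft(log_magnitude, n=len(frame))


def cepstrum_vector(frame: np.ndarray, n_coeffs: int = 13) -> np.ndarray:
    """Hypothetical feature vector: the lowest-order cepstral coefficients (spectral envelope)."""
    return real_cepstrum(frame)[:n_coeffs]


def estimate_f0(frame: np.ndarray, sample_rate: int,
                f0_min: float = 50.0, f0_max: float = 400.0) -> float:
    """Estimate pitch from the cepstral peak within a plausible pitch-period range."""
    ceps = real_cepstrum(frame)
    q_min = int(sample_rate / f0_max)  # shortest plausible period, in samples
    q_max = int(sample_rate / f0_min)  # longest plausible period, in samples
    peak = q_min + int(np.argmax(ceps[q_min:q_max]))
    return sample_rate / peak


if __name__ == "__main__":
    sr = 16_000
    frame_len = sr * 40 // 1000                    # one 40 ms frame (640 samples)
    t = np.arange(frame_len) / sr
    # Synthetic stand-in for a voiced frame: 200 Hz fundamental plus 19 decaying harmonics.
    frame = sum(np.sin(2 * np.pi * 200 * k * t) / k for k in range(1, 21))
    print(cepstrum_vector(frame).shape)            # (13,)
    print(estimate_f0(frame, sr))
```

In a detection pipeline, vectors like these would typically be computed frame by frame across a recording and passed to a classifier; the synthetic 200 Hz signal here merely stands in for a real voice sample.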

Hegde and colleagues’ comprehensive “Survey on Machine Learning Approaches for Automatic Detection of Voice Disorders” (147 citations) mapped the landscape of machine learning in voice science and has become essential reading for anyone entering this space. Al-Nasheri and colleagues contributed two highly cited papers in 2017: one on Multidimensional Voice Program parameters (108 citations) and another on correlation functions for voice pathology detection (97 citations).

The research has evolved rapidly. Chen and Chen’s 2022 paper on deep neural networks for voice classification, Fujimura’s work on one-dimensional convolutional neural networks (CNNs), and Cho and Choi’s comparison of CNN models for laryngoscopic images each build on what came before, and each pushes the boundaries of what is possible.

Voice as Digital Biomarker

Perhaps the most transformative concept emerging from recent research is the idea of voice as a digital biomarker—a non-invasive window into systemic health.

We’ve published groundbreaking work on using voice analysis to detect and monitor Parkinson’s disease. Hemmerling and Wójcik-Pędziwiatr’s 2022 paper on predicting Parkinson’s severity from voice signals has garnered 29 citations in just three years, with more recent work pushing this even further toward clinical application.

But the applications extend beyond neurological conditions. Recent systematic reviews explore voice quality as a digital biomarker for depression and bipolar disorder. The implications are profound: the possibility of detecting mental health changes through routine voice analysis, enabling earlier intervention, and reducing the burden on overstretched mental health systems.

Research on using machine learning to assess COVID-19 from voice characteristics demonstrates how voice can serve as a sentinel for respiratory health.

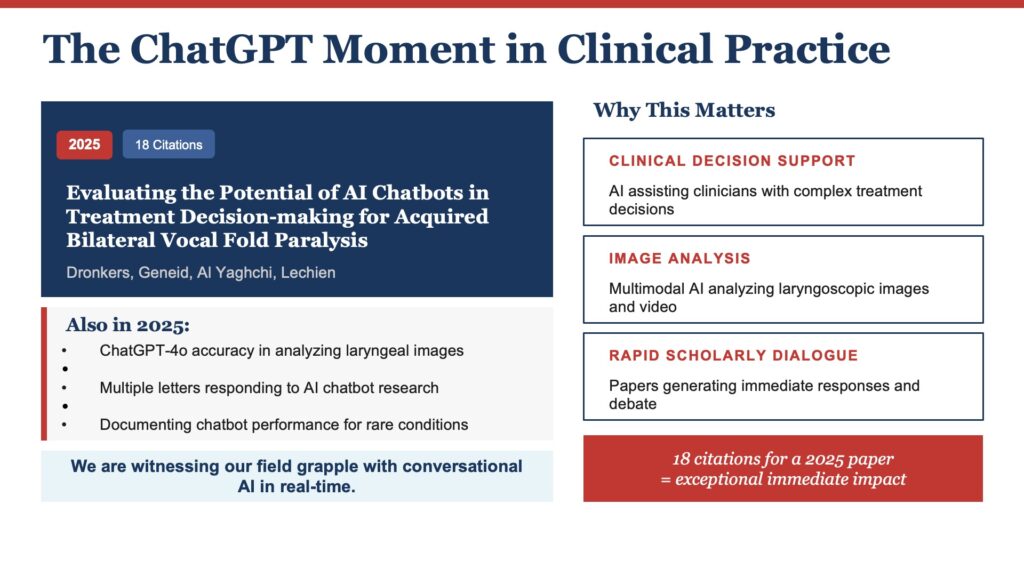

AI Chatbots in Clinical Practice

In 2025, we’ve published a series of papers examining AI chatbots in clinical decision-making. Dronkers and colleagues’ paper on “Evaluating the Potential of AI Chatbots in Treatment Decision-making for Acquired Bilateral Vocal Fold Paralysis” has already accumulated 18 citations—exceptional for a paper published in 2025, demonstrating immediate scholarly impact.

This work generated multiple letters to the editor and response papers, including research on ChatGPT-4o’s accuracy in analyzing laryngeal images. We are witnessing, in real time, our field grappling with the implications of conversational AI in clinical settings.

Expert Perspectives on AI in Voice Science

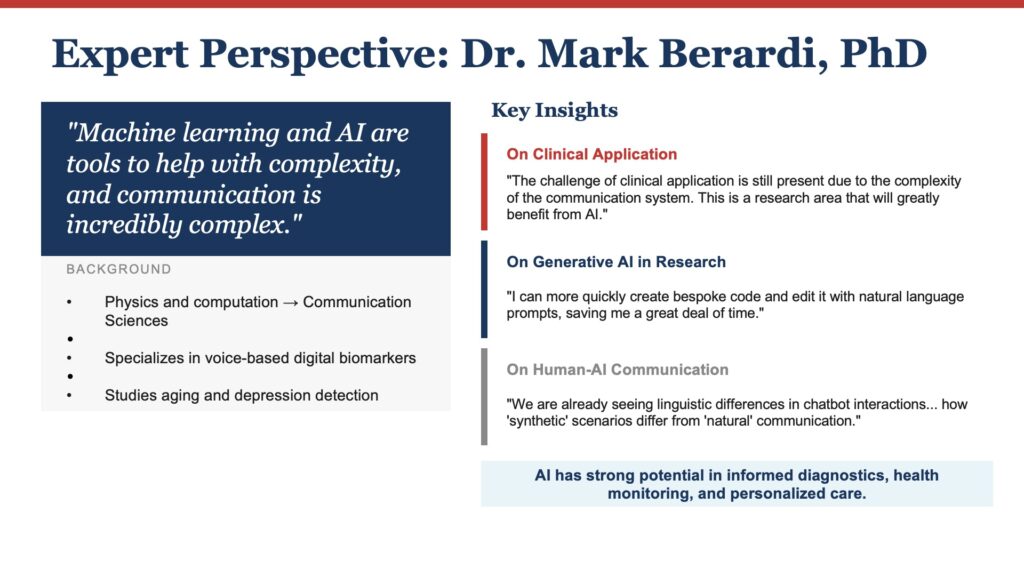

Dr. Mark Berardi: AI as a Tool for Complexity

Dr. Mark Berardi brings a unique perspective to voice science, having come to the field from physics and computation. He sees AI as fundamentally a tool for managing complexity: “Machine learning and AI are tools to help with complexity, and communication is incredibly complex in its neurobiological and physiological processes. So I think the application is warranted.”

His research focuses on voice-based digital biomarkers for aging and depression. “Acquiring speech and voice signals is relatively easy now, and we have shown potential for extracting meaningful health information,” he notes. The challenge lies in “the complexity of the communication system” itself—but this is precisely where AI excels.

On generative AI, Dr. Berardi offers a nuanced view. While he hasn’t yet seen it create “a major change to research,” he recognizes its potential to “address some of the bottlenecks we have in research”—particularly in coding and data processing. He can now “quickly create bespoke code and edit it with natural language prompts.”

Perhaps most intriguingly, Dr. Berardi is studying human-AI communication itself—how we adapt our speech when talking to chatbots versus humans, how “synthetic” communication scenarios like Zoom calls differ from face-to-face interaction. “We are already seeing linguistic differences in chatbot interactions,” he notes. The field may need to expand to understand not just human voice, but human voice in conversation with machines.

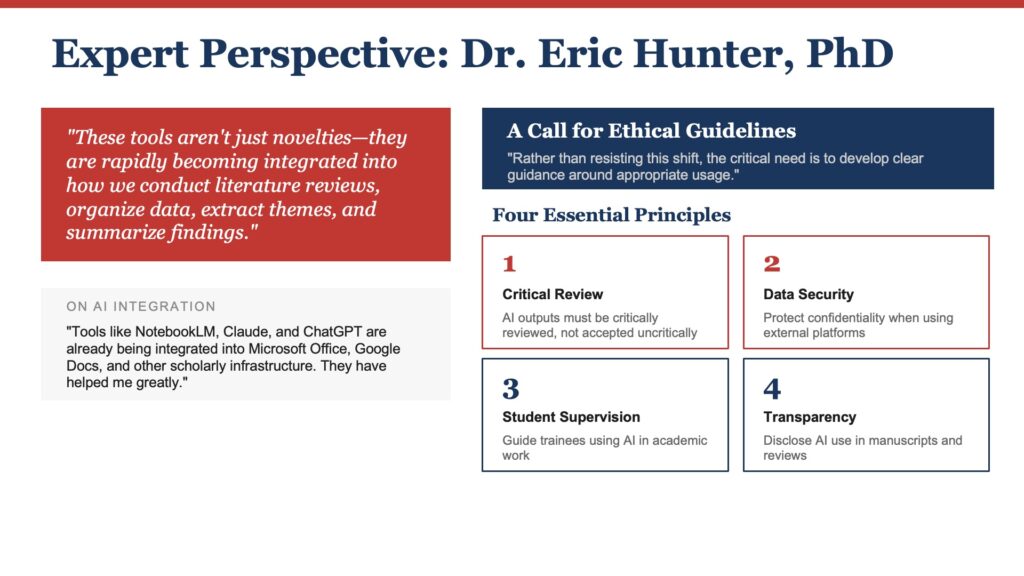

Dr. Eric Hunter: Embracing Change with Ethical Clarity

Dr. Eric Hunter emphasizes the institutional implications of AI integration. “These tools aren’t just novelties,” he observes. “They are rapidly becoming integrated into how we conduct literature reviews, organize data, extract themes from large bodies of text, and even summarize findings.”

He points to an inescapable reality: “Large language models and AI-driven platforms are not going away. In fact, we should expect them to become increasingly embedded within common academic workflows—whether through journal article preparation, manuscript reviewing, data analysis, or collaborative writing.”

Tools such as NotebookLM, Claude, and ChatGPT are already part of many researchers’ workflows, and similar AI assistants are being built directly into Microsoft Office, Google Docs, and other everyday writing tools. But Dr. Hunter issues a crucial call to action: “Rather than resisting this shift, the critical need is to develop clear guidance around appropriate usage—for both authors and reviewers.”

He outlines four essential principles:

- Ensuring that any AI-generated output is critically reviewed rather than accepted at face value

- Protecting data security and confidentiality when uploading sensitive content to external platforms

- Supervising students and trainees who may use AI tools as part of their academic work

- Transparently disclosing AI use when preparing manuscripts or review comments

“Our field will benefit most,” Dr. Hunter concludes, “if we embrace the productivity these tools offer while also building a shared ethical framework for their responsible use.”

A Global Research Community

The AI voice research community extends far beyond individual labs. Leading authors and co-authors of AI research in the Journal of Voice include Jérôme René Lechien (6 papers), Dimitar Deliyski (5 papers), Stephanie Zacharias (5 papers), and Ahmed Yousef (4 papers as first author), along with Paavo Alku, Leonardo Wanderley Lopes, and Maryam Naghibolhosseini (4 papers each). These researchers, along with dozens of others from institutions around the world, are pioneering the application of artificial intelligence to voice science.

This is not the work of a few isolated labs. This is a global community, united by a shared commitment to understanding the human voice through the tools of machine learning and AI.

The Path Forward

The Journal of Voice will continue to be the platform for this evolving science. We remain committed to publishing rigorous research that pushes boundaries while maintaining the highest standards of scientific integrity.

But the field faces important challenges that require collective action:

First, we must engage with AI tools thoughtfully. These are tools for managing complexity. We need to learn what they can do, understand their limitations, and use them to amplify our expertise—not replace our judgment.

Second, we must participate in developing ethical guidelines. Dr. Hunter’s call for “a shared ethical framework” cannot be answered by any one institution or journal. It requires our entire community working together.

Third, we must continue to publish and share our work. The 161 papers in our journal represent just the beginning. Every clinician, researcher, and educator has insights that can advance our collective understanding.

An Extraordinary Moment

The human voice has been our instrument of connection, expression, and identity for hundreds of thousands of years. It carries our emotions, our health, our very selves in ways that no other signal can match.

Now, for the first time in history, we have tools sophisticated enough to begin truly understanding that complexity—to decode what the voice reveals about our brains, our bodies, our wellbeing. We have tools that can extend the reach of expert clinicians, that can detect subtle changes before they become serious problems, that can democratize access to voice care around the world.

This is an extraordinary moment. And the Journal of Voice stands at the center of it. The next decade promises even greater discoveries. We look forward to sharing them with you.

About the Author

Ian DeNolfo is Executive Director of The Voice Foundation, which publishes the Journal of Voice. A graduate of The Juilliard School and The Curtis Institute of Music, he performed as a leading tenor at opera houses worldwide before joining The Voice Foundation.